Mind-blowing meeting of minds at Multicore World Ōtautahi

Trigger warning: this is a bit technical, lol

Last month, a small group of next-generation computing experts from around the world descended on Ōtautahi Christchurch for the first time, for the 11th Multicore World conference.

I was curious to hear what a group of top technologists from household names like Microsoft, Google and Linux, as well as top universities and computing research organisations, were going to talk about in New Zealand. I was also wondering whether I would understand any of it!

The beauty of the Multicore World crowd was the unique, relaxed “at home” atmosphere that organiser Nicolas Erdödy manages to create as he curates deep thinking among a room full of peers and friends. The group was small enough that over the five days you could connect with everyone you wanted to, in a “let’s share notes” or friendly “share a beer” kind of way.

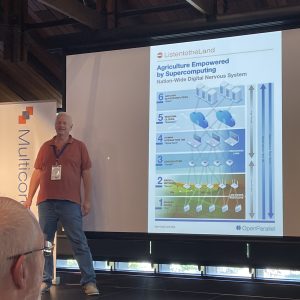

Open access to supercomputing

Artificial intelligence and its implications for computing infrastructure was a major consideration, but not the only one. Several delegates were working towards free and open access to high performance computing for societal good. Manish Parashar’s work with the Open Science Data Federation and projects like www.nationaldataplatform.org aims to give anyone access to the computing power needed to crunch large scientific research datasets, and has come to fruition through billions of dollars of investment. The biggest barrier that stood out to me was that everything had been thought through from the supercomputer outwards, whereas we know that to make anything truly accessible, you need to start with the users and work the other way.

UX improvements

This prompted my first musing: how often do user experience (UX) and customer experience (CX) experts work in the depths of supercomputing, and what a difference could it make if they did? Supercomputers and high performance computing underpin everything we currently do as humans, from food supply to medical research. However, they are poorly understood by governments, and this is reflected in decision-making around the world. What if we demystified the backend? Could that lead to better decisions?

Rapid iteration meets infrastructure

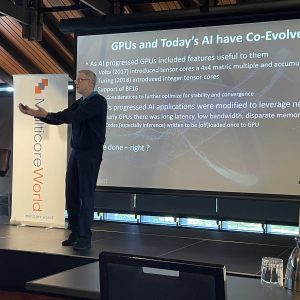

We heard how the computing power required for artificial intelligence has increased rapidly over the last year, and how the way hyperscale computing companies respond is changing too. Previously, infrastructure had a slow and steady iterative cycle, with the odd new chip or core configuration invented at regular intervals, whereas the applications running on top of it were subject to rapid iteration.

Messing with the algorithms

Now, rather than taking a one-size-fits-all approach to infrastructure, HPC (high performance computing) experts are building different supercomputers with different algorithmic approaches to process different data for different applications. Researchers compared a range of studies into which algorithmic and core/chip/compiler configurations are best suited to different AI applications, and the answer is no longer static.

Microsoft gave us a window into just how much this has sped up, saying they now deploy a new supercomputer each and every week!

Hyperscale wins the race

Large enterprises such as legal and accounting firms increasingly want to run LLMs on private networks for privacy and security reasons, and some have been looking to set up their own infrastructure. There was a warning that these efforts may fall flat unless their infrastructure teams are set up to deliver in a ‘software’ style, as a series of agile, experimental iterations, because the pace of change is exponential.

Great performance improvements come from software

There was also a fascinating presentation by Ruud Van der Pas from Oracle comparing the performance gained by writing better code with the performance gained by buying a better processor, and every mixture in between. You guessed it: the software engineer holds just as many keys to better performance.

The line between core and edge is blurring

The theoretical concepts of edge computing (where the data is generated and collected) and core computing (the servers the data goes back to in order to be crunched) are also changing rapidly. This is where the conversation turned to the space-time continuum and the physicists got excited. As beaming data over long distances has become faster, the trade-offs between time and distance have changed.

It doesn’t matter where

One speaker pointed out that the maximum distance data ever needs to travel on Earth is half the planet’s circumference, for example from Spain to NZ or back again. As the time taken to cover that maximum distance becomes negligibly different from the time for any shorter distance, where something is computed is no longer the most important factor; what matters more is when and how. For example, can two tasks be processed non-sequentially or at the same time? If you let the computer know, you massively increase your performance.
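To put rough numbers on that claim, here is a back-of-the-envelope sketch in Python. It is my own illustration, not something shown at the conference, and it assumes a straight great-circle path, an equatorial circumference of 40,075 km, and light in optical fibre travelling at about 1/1.47 of its vacuum speed (a typical textbook refractive index). It ignores routing detours, switching and queuing, so real-world latency will be higher.

```python
# Rough lower bound on one-way propagation delay for data travelling
# half of Earth's circumference (the farthest two points on the surface can be apart).
# Assumptions: great-circle path; no routing detours, switching or queuing delay.

EARTH_CIRCUMFERENCE_KM = 40_075.0   # equatorial circumference
SPEED_OF_LIGHT_KM_S = 299_792.458   # speed of light in a vacuum
FIBRE_REFRACTIVE_INDEX = 1.47       # typical value for silica fibre (assumed)


def one_way_delay_ms(distance_km: float, refractive_index: float = 1.0) -> float:
    """Return propagation delay in milliseconds over distance_km in a medium
    with the given refractive index (1.0 means a vacuum)."""
    speed_km_s = SPEED_OF_LIGHT_KM_S / refractive_index
    return distance_km / speed_km_s * 1000.0


max_distance_km = EARTH_CIRCUMFERENCE_KM / 2

vacuum_ms = one_way_delay_ms(max_distance_km)
fibre_ms = one_way_delay_ms(max_distance_km, FIBRE_REFRACTIVE_INDEX)

print(f"Half circumference: {max_distance_km:,.0f} km")
print(f"One way, vacuum:    {vacuum_ms:.1f} ms")
print(f"One way, fibre:     {fibre_ms:.1f} ms")
print(f"Round trip, fibre:  {2 * fibre_ms:.1f} ms")
```

Under these assumptions, the worst-case one-way trip comes out at roughly 67 ms in a vacuum and just under 100 ms in fibre, a fraction of a blink in human terms.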

Computations can also be done “in the field”, at the same place the data is collected, in new and rapid ways: on your phone, or on a chip in the device capturing the data. As a result, the line between edge and core is increasingly blurred. “Core” as we know it may become more and more decentralised, and “edge” could potentially pick up so much of the processing that a central core is no longer required.

What’s the real question?

Then we got into the area of Large Language Models (like ChatGPT) and how they are impacting the discipline of high performance computing. Andrew Jones, Lead of AI & HPC at Microsoft, talked about the problems with weather forecast statistics. He told the audience that if the forecast says there is a 70% chance of rain, your brain just hears that it will be raining hard tomorrow. That’s not what the statistic means at all, but it’s a jump we all make because the percentage on its own is useless to us, so we try to make meaning out of it. What we really want is enough information to decide what to wear or whether to bike.

Search engines are so 2023; we are now using answer engines like ChatGPT or Perplexity. In 2024, I am going to ask the answer engine what I really want to know, which is: what time is it gonna stop raining today? And now there is a two-way opportunity: the opportunity to provide an answer but, arguably more interesting, the opportunity to change what data we collect based on the question.

Is it a moment from Douglas Adams’s The Hitchhiker’s Guide to the Galaxy series? Well, yes. But we need the LLM to tell us what the question is (or was), because it now knows what everyone has been asking. So when will we trust the model to tell us the questions that we have all collectively asked it? And can we have edge computing, such as sensors, that is smart enough to collect or compile new data to answer these actual questions without human intervention? I can see a great opportunity to connect to blockchain oracles here (blockchain-controlled data sources). Maybe it’s time to buy more LINK (the crypto token for oracle data streams).

Data sovereignty doesn’t affect Americans

Data sovereignty and indigenous data sovereignty were discussed over a whole morning, and an interesting panel delved deeper into different perspectives. Then, over morning tea, people from Europe observed that Americans never experience issues with data sovereignty (except First Nations people), so there isn’t the same depth of feeling or imperative to solve the issues for nations outside the USA. Unfortunately, without that understanding, it is a hard nut to crack.

The international crowd thoroughly loved the unique NZ flavour of the event; a heartfelt welcome from Ngai Tūāhuriri, and cream scones and sausage rolls for morning tea, certainly helped. It was also great to see local representatives from Te Ao Matihiko, Seequent, Jade, Ngai Tahu Holdings, NIWA, MFAT and Umajin from Palmerston North, some presenting and some contributing to the discussions.

Multicore World 2025 is a must-attend event

The brilliant news is that Ōtautahi Christchurch has secured Multicore World again for next year, 17-21 February 2025, and I invite anyone who is interested in performance engineering, AI computing power, data science or infrastructure engineering to attend, for one day or all five if you can. I made some valuable global connections, and I will certainly head along next year to keep my eye on this rapidly changing field that literally underpins all technological progress.